Seismic Technology

Technological Advances Bringing New Capabilities To Seismic Data Acquisition

By David Monk

HOUSTON–Geophysical and geological data are the foundational information on which successful projects are built–the “ground truth” building blocks used to construct nearly every well in every field. They provide critical insights into structure, rock properties and in situ reservoir characteristics, and guide well placement, completion and production optimization strategies.

Seismic data acquisition is being challenged in different ways, depending on the application, whether a deepwater subsalt environment, a horizontal resource play, or an onshore enhanced oil recovery project. Technology, likewise, is responding on a variety of fronts, including advances in broadband data recording, simultaneous sources, full-azimuth 3-D, higher channel counts, wireless systems, smaller and more self-sufficient receivers, and robotic autonomous nodes.

However, the overarching trend common to virtually every seismic survey is the need to acquire more and more data–more angles, more density, more channels, more frequencies, etc.–with ever-greater efficiency.

Some aspects of technology transcend the industry for which they were suggested. When Gordon Moore predicted that the number of components on an integrated circuit would double every year, I doubt he envisioned his “law” being applied to other sciences. It was not until several years later that inventor and futurist Ray Kurzweil suggested that a key measurement of any technology is multiplied by a constant factor for each unit of time (e.g., doubling every year), rather than just being added to incrementally.

We can apply the same idea to seismic acquisition to understand (and predict) what will happen as we move forward. Specific examples of this phenomenon include land seismic channel counts doubling every 3.5 years and marine channel counts doubling every 4.5 years. Plotted against time on a logarithmic scale, these advances fall on a straight line.
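
As a rough illustration of what a fixed doubling time implies, the sketch below projects a channel count forward and inverts the same growth law. The function names and the 100,000-channel starting point are illustrative assumptions; only the 3.5- and 4.5-year doubling times come from the text.

```python
import math

LAND_DOUBLING_YEARS = 3.5    # doubling time quoted in the article
MARINE_DOUBLING_YEARS = 4.5  # doubling time quoted in the article

def projected_count(start_count, years, doubling_years):
    """Exponential growth: the count doubles once per doubling period."""
    return start_count * 2 ** (years / doubling_years)

def years_to_reach(start_count, target_count, doubling_years):
    """Invert the growth law to find how long a target takes to reach."""
    return doubling_years * math.log2(target_count / start_count)

# Hypothetical starting point of 100,000 land channels (an assumption).
print(projected_count(100_000, 7.0, LAND_DOUBLING_YEARS))
print(years_to_reach(100_000, 400_000, LAND_DOUBLING_YEARS))
```

Because the exponent is linear in time, the logarithm of the count grows by the same amount every year, which is why such trends plot as straight lines on a log scale.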

Technology adoption follows a pattern as well, described as an S curve. Development is slow initially as a concept is formed, followed by a period of rapid growth and development, and finally leveling as a technology matures and further developments are small. Rapid growth in adoption only happens once there is a widespread understanding of the value delivered by the innovation.
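
One common way to draw such an S curve is the logistic function. The sketch below is only an illustration: the article does not specify a functional form, and the `midpoint` and `rate` parameters are hypothetical.

```python
import math

def adoption_fraction(t, midpoint, rate):
    """Logistic S curve: slow start, rapid middle, flattening at maturity.

    t        -- time, in any consistent unit (for example, years)
    midpoint -- time at which adoption reaches 50 percent (assumed)
    rate     -- steepness of the rapid-growth phase (assumed)
    """
    return 1.0 / (1.0 + math.exp(-rate * (t - midpoint)))

# Hypothetical technology that reaches half adoption after 12 years.
for year in (0, 6, 12, 18, 24):
    print(year, round(adoption_fraction(year, midpoint=12, rate=0.4), 3))
```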

While not all companies are positioned to invest early in technology, they do need to stay abreast of trends and developments. Adopting the right technology early in the game can lead to a distinct competitive advantage, especially in highly competitive or high-cost operating domains such as unconventional resource plays, or deepwater and international projects. A number of technologies are almost certain to change the game in seismic acquisition over the next few years. Meanwhile, ongoing increases in computational power (again predicted by Moore’s Law) will enable processors and interpreters to extract more value from the data collected in the field.

Fuller Spectrum

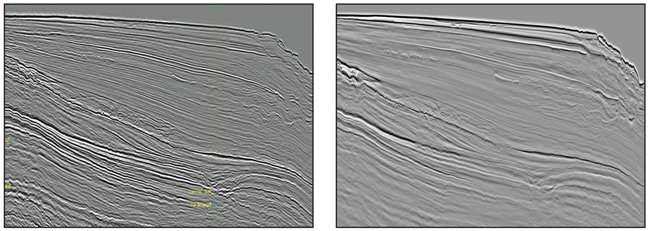

Significant research and development in seismic acquisition is taking place with both broadband and simultaneous-source methods. Many companies have benchmarked some of the numerous techniques proposed for acquiring extended bandwidth seismic over the past three or four years. Apache acquired test data from techniques for enhancing bandwidth offshore Australia in 2011 and 2012, and tied this with data from a conventional 3-D survey shot during the same period (Figure 1). In addition, Apache acquired the first commercial marine simultaneous-source survey around the same time in the same part of the world.

Broadband seismic attempts to capture the full spectrum of both high and low frequencies for improved-resolution imaging and data inversion, enabling a better understanding of rock properties in the subsurface. Recording the full range of frequencies provides higher-fidelity data for clearer images with significantly more detail of deep targets as well as shallow features.

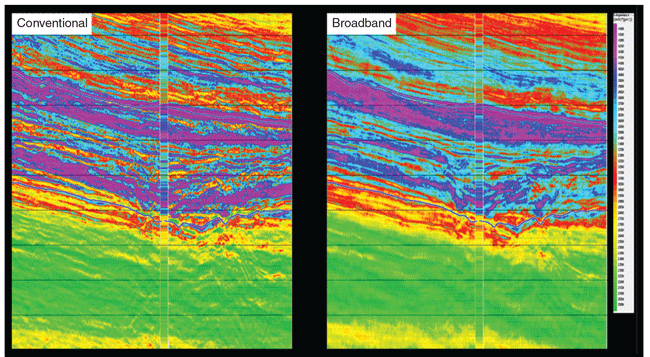

Moreover, the additional frequencies in the data allow a higher-fidelity inversion of seismic back to the underlying rock properties, and improved confidence in the ability to predict and match well data. Figure 2 shows the shear impedance attribute derived from conventional (left) versus broadband (right) datasets. Broadband data ties to well data are improved, and broadband data better correlate to geology. The result is more quantitative interpretation and higher confidence in rock properties away from drilled well locations.

When a source is activated in the water, not all the energy goes down. The fraction that goes up hits the surface and then is reflected downward so that it interferes either constructively or destructively as it travels down. Often referred to as “ghosting,” this has been a limiting factor to the recorded bandwidth of seismic data in marine acquisition.
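
To see why ghosting limits bandwidth, consider the simplest case: energy traveling vertically, a flat sea surface that reflects with reversed polarity, and a nominal water velocity of 1,500 meters per second (all assumptions for this sketch). Destructive interference then produces notches at multiples of the velocity divided by twice the depth.

```python
WATER_VELOCITY_M_S = 1500.0  # assumed nominal speed of sound in seawater

def ghost_notch_frequencies(depth_m, max_freq_hz, velocity=WATER_VELOCITY_M_S):
    """Notch frequencies for vertical travel: f_n = n * v / (2 * depth)."""
    spacing = velocity / (2.0 * depth_m)
    notches = []
    n = 0
    while n * spacing <= max_freq_hz:
        notches.append(n * spacing)
        n += 1
    return notches

# Hypothetical source or streamer depths, in meters.
for depth in (7.5, 15.0, 25.0):
    print(depth, ghost_notch_frequencies(depth, 250.0))
```

Deeper towing moves the first nonzero notch to lower frequencies, so depth trades low-frequency content against high-frequency content. That trade-off is what the broadband techniques aim to remove.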

To deal with ghosting offshore, surveys now include towing different types of streamers, or towing conventional streamers in different geometries. These strategies mitigate some of the problem caused by towing the streamers below the surface. Streamers that contain both pressure and velocity measurement devices have the potential to distinguish energy traveling upward from energy traveling downward, and to thereby “deghost” the recorded data.
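
The idea behind combining pressure and velocity measurements can be sketched for vertically traveling plane waves. This is a simplified illustration, not any vendor's algorithm: it assumes depth is positive downward, nominal water density and velocity, and perfectly calibrated sensors. Under those assumptions, pressure is the sum of the up-going and down-going parts, while impedance-scaled vertical velocity is their difference.

```python
import numpy as np

def separate_wavefields(pressure, vertical_velocity, density=1000.0, velocity=1500.0):
    """Split recorded data into up-going and down-going pressure.

    Assumes vertical plane waves and depth positive downward, so that
    pressure = up + down and impedance * vz = down - up.
    """
    impedance = density * velocity  # assumed acoustic impedance of water
    p = np.asarray(pressure, dtype=float)
    scaled_vz = impedance * np.asarray(vertical_velocity, dtype=float)
    up = 0.5 * (p - scaled_vz)
    down = 0.5 * (p + scaled_vz)
    return up, down

# Synthetic check: build recordings from known up and down parts.
up_true = np.array([0.0, 1.0, 0.0, -0.5])
down_true = np.array([2.0, 0.0, -1.0, 0.0])
p = up_true + down_true
vz = (down_true - up_true) / (1000.0 * 1500.0)
print(separate_wavefields(p, vz))
```

Keeping only the up-going part removes the receiver-side ghost, which is the down-going reflection from the sea surface.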

Even more complex measurement systems are being deployed now, which measure not only pressure and velocity, but also record the gradients associated with the changing pressure field. Using this gradient information potentially enables 3-D reconstruction of both up-going and down-going wave fields at any location within the streamer array, and at any desired datum.

The ghost problem is less common onshore, where source and receiver are often both on the surface. However, if the source is below the surface, some frequencies are canceled out. I expect innovations in both source and receiver to continue onshore as well as offshore.

Historically, seismic data have been used to interpret structures and potential hydrocarbon traps for exploration drilling. Today, geophysical data are being used to yield better understanding of rock properties ahead of the bit and to geosteer the drill path based on favorable rock property information, and not necessarily simply related to structure. Someday, seismic data may even provide sufficient information to accurately map commercially producible hydrocarbons before drilling or logging. Broadband data are essential to achieving that goal.

Just as there has been a constant increase in the number of recording channels active for each seismic shot acquired in the field, there has been a desire to increase the number (density) of shots. Simultaneous-source acquisition is a disruptive technology that has led to efficiently and economically acquiring much more long-offset, full-azimuth data. Every seismic shot is associated with a finite amount of time during which the earth response is recorded. As we add the shots required to acquire more data, we also add time to the exploration effort if the shots are recorded sequentially. Onshore, every vibroseis shot shakes the ground for up to 30 seconds. With a sequential activation of sources, survey time can become excessive.

The solution onshore has been to allow multiple vibroseis units to shake at the same time in many places. Receivers listen continuously while shots are fired at multiple locations. Land-based, simultaneous source acquisition techniques have been around for a while and processors have learned how to effectively separate the data. The result is very high-density seismic data collected much more efficiently.

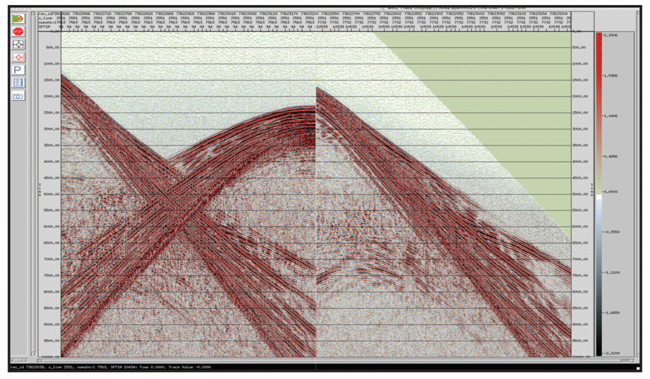

Offshore, early experiments have led to production with simultaneous-source surveying. The goal is to have multiple source boats shooting at the same time, with all data acquired in one pass. That has huge potential for acquiring more data in less time and at lower overall cost. Innovations have been focused on processing techniques for deblending or separating the interfering shots. Figure 3 shows marine simultaneous-source survey data from two source vessels both before and after separation.
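
A toy example shows why random firing delays make separation possible. It is a sketch under strong assumptions, not a production deblending method: each source contributes a single spike, the event from source A is flat across traces, and the function names are hypothetical. Once traces are aligned on source A's firing time, the energy from source B lands at a different sample on every trace, so a median across traces rejects it.

```python
import numpy as np

def blend(record_a, record_b, delays):
    """Add record_b to record_a, delaying trace i of record_b by delays[i] samples."""
    blended = record_a.astype(float).copy()
    n_samples = record_a.shape[1]
    for i, delay in enumerate(delays):
        blended[i, delay:] += record_b[i, :n_samples - delay]
    return blended

def median_estimate(blended):
    """Estimate the coherent (source A) trace as the median across traces."""
    return np.median(blended, axis=0)

# Synthetic data: 21 traces, 200 samples, one spike per source.
rec_a = np.zeros((21, 200))
rec_a[:, 50] = 1.0
rec_b = np.zeros((21, 200))
rec_b[:, 30] = 1.0
delays = list(range(0, 84, 4))  # stand-in for random, distinct dither times
recovered = median_estimate(blend(rec_a, rec_b, delays))
print(np.array_equal(recovered, rec_a[0]))
```

Real events are not flat and the interference is not a single spike, so practical deblending relies on more elaborate filtering or inversion. The principle of making one source coherent and the other incoherent is the same.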

Richer Azimuths

Advances in wide-azimuth and full-azimuth (FAZ) acquisition geometries have been driven by the extreme challenges of imaging at depth in subsalt plays. Full-azimuth data result in a better image for interpretation, and also can improve the understanding of fracture patterns, and reveal dips and geologic features unseen in limited-azimuth data. Having many directions of analysis can help if the subsurface structure is complicated.
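
Azimuth coverage is usually assessed by computing, for every source-receiver pair, the offset and the compass direction from source to receiver, then counting traces per direction sector. The sketch below assumes map coordinates in meters (easting, northing) and treats opposite directions as equivalent, a common simplification. The function names and the six-sector default are illustrative.

```python
import math

def offset_and_azimuth(src_x, src_y, rec_x, rec_y):
    """Return (offset in meters, azimuth in degrees clockwise from north)."""
    dx = rec_x - src_x  # easting difference
    dy = rec_y - src_y  # northing difference
    offset = math.hypot(dx, dy)
    azimuth = math.degrees(math.atan2(dx, dy)) % 360.0
    return offset, azimuth

def azimuth_sector(azimuth_deg, n_sectors=6):
    """Sector index after folding azimuths into the 0-180 degree range."""
    width = 180.0 / n_sectors
    return int((azimuth_deg % 180.0) // width)

# Hypothetical source at the origin and three receiver positions.
for rec in ((0.0, 3000.0), (3000.0, 0.0), (-2000.0, -2000.0)):
    off, az = offset_and_azimuth(0.0, 0.0, *rec)
    print(round(off), round(az, 1), azimuth_sector(az))
```

A narrow-azimuth streamer survey fills only one or two of these sectors at long offsets. A full-azimuth design aims to populate all of them.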

While the most powerful benefit of full-azimuth seismic is in imaging highly complex geologic features, such as salt bodies and the sediments beneath them, high-fold, FAZ datasets also are being acquired in shale plays to recover in situ angle-domain reflectivity at depth for fracture/stress and geomechanical property analysis, such as determining natural fracture intensity and orientation.

Full-azimuth seismic data can be very expensive to acquire. In the marine environment, full-azimuth surveys using streamers must tow complicated paths, or use multiple vessels or shoot the survey multiple times. These techniques all imply additional cost, but the improved final image certainly can justify the price tag by reducing drilling and development risk, especially in high-cost deepwater projects.

Full-azimuth data acquisition is about 12 years into the adoption curve, and its application has expanded from imaging structures in challenging environments (subsalt) to imaging structures where the reservoir itself (not the geologic environment surrounding it) is complicated. This is often the case with unconventional reservoirs.

The potential benefit of FAZ in ultralow-permeability shale plays is the ability to accurately map the locations and orientations of microfractures. Operators then can design the best drilling and completion plan to exploit the natural fracture system. Azimuthal analysis to understand fracture patterns shows a great deal of promise.

Higher Channel Counts

As noted at the start of this article, the industry has demonstrated a voracious appetite for channel count. Twenty years ago, a “high channel count” survey might have had 1,000 live channels. Ten years ago it might have had 25,000. Today, surveys have channel counts in the hundreds of thousands, and I have no doubt there will be an onshore survey with 1 million live channels on the ground within five years.

In fact, there are already at least two crews with 200,000 channels active for each shot. The greater the number of channels, the more of the seismic wave field is recorded and the more noise is canceled out for higher densities, improved resolution, and imaging accuracy.
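
The noise benefit can be illustrated with the standard result that averaging N traces containing independent random noise reduces the noise level by the square root of N. The sketch below checks this numerically. It assumes the noise is uncorrelated between channels, which field noise satisfies only in part, and the function name is hypothetical.

```python
import numpy as np

def stack_noise_std(n_traces, n_samples=20_000, noise_std=1.0, seed=0):
    """Standard deviation of the noise left after averaging n_traces traces."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, noise_std, size=(n_traces, n_samples))
    return noise.mean(axis=0).std()

# Compare the residual noise for increasing numbers of traces.
single = stack_noise_std(1)
for n in (4, 16, 64):
    print(n, round(single / stack_noise_std(n), 2))
```

The printed ratios should sit close to 2, 4 and 8, the square roots of the trace counts.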

Integrated point-source/point-receiver land seismic systems allow contractors to acquire full-azimuth, broad bandwidth surveys with very high channel counts within manageable operational cycle times. Multicomponent and full-bandwidth receivers also increase required channel counts. In addition, increased spatial sampling and new components of measurement push up channel counts.

The logistics associated with deploying a 3-D survey with 1 million channels are likely to accelerate the adoption of wireless technology and autonomous nodes. FAZ data and higher folds add to the complexity and likelihood that channels will not be connected with cables. Wireless systems also remove some of the constraints on survey design geometries.

Data management will take on even greater importance as companies struggle to manage the massive volume of data each survey acquires. Acquiring 1 million data streams every 10 seconds creates a huge (albeit highly valuable) dataset.
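
To put a rough number on that volume, the sketch below estimates the raw size of one record and of a day of continuous recording. The 2-millisecond sample interval and 4 bytes per sample are assumptions chosen for illustration; only the 1 million channels and the 10-second record come from the text.

```python
def bytes_per_record(n_channels, record_seconds, sample_interval_s=0.002, bytes_per_sample=4):
    """Raw size of one record, ignoring headers and compression."""
    samples_per_trace = round(record_seconds / sample_interval_s)
    return n_channels * samples_per_trace * bytes_per_sample

per_record = bytes_per_record(1_000_000, 10)
records_per_day = 24 * 3600 // 10          # one 10-second record after another
per_day = per_record * records_per_day

print(per_record / 1e9, "GB per record")
print(per_day / 1e12, "TB per day of continuous recording")
```

Under those assumptions, each record is 20 gigabytes and a full day of back-to-back records approaches 173 terabytes.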

Autonomous Nodes

Offshore, decoupling the source and receiver by using nodes allows the acquisition of long offsets for any and all azimuths. Bottom-referenced systems utilizing hydrophones and geophones have the capability to deghost the receiver for broadband data. Multiple source vessels acting independently and randomly reduce costs significantly.

The downside is that the process of deploying nodes can be slow, and slow deployment means higher cost. At this point, there must be significant technical benefit to having full-azimuth, long-offset data to justify its acquisition.

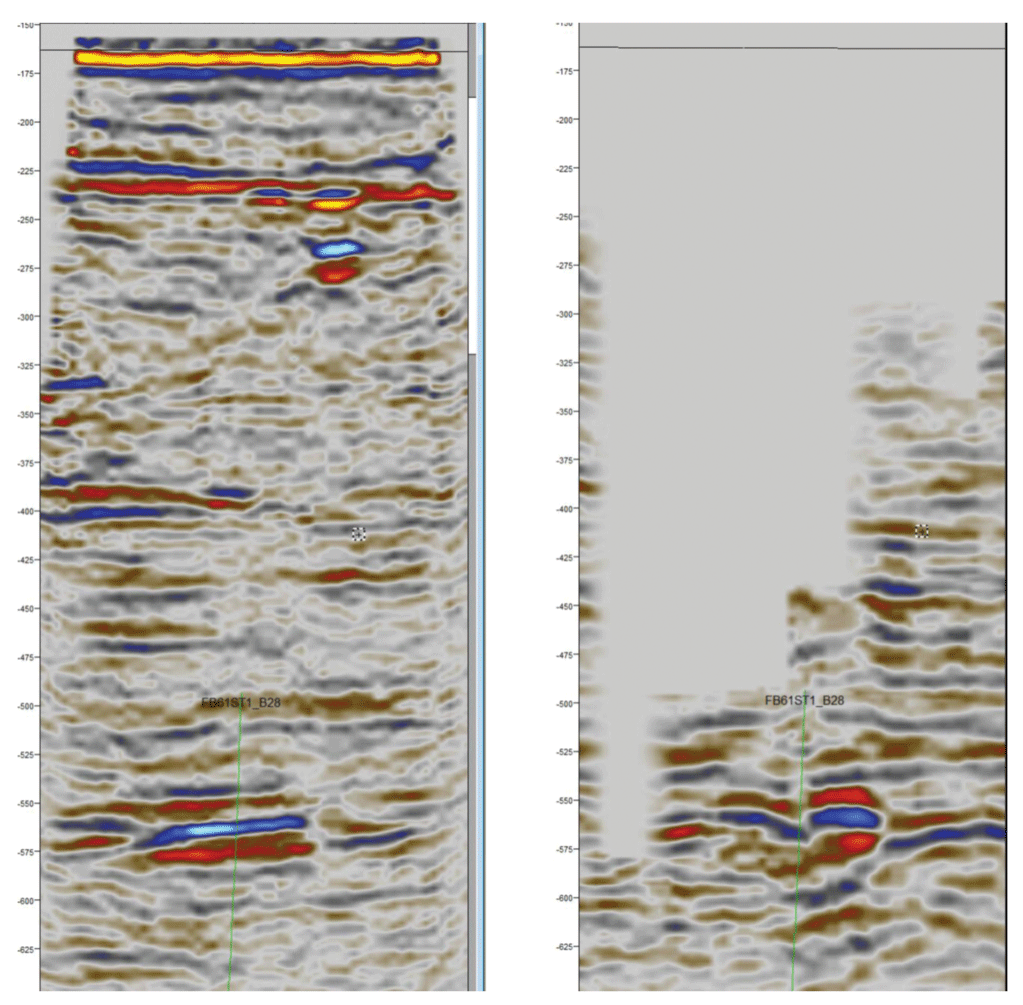

Node acquisition also enables data recording in situations where a conventional streamer survey would not be possible. Figure 4 shows sample data acquired beneath a platform using nodes (left) compared with undershooting 3-D (right). Autonomous nodes deployed by a remotely operated vehicle were placed very close to and even under platforms. A separate highly maneuverable vessel with a source was able to get very close to these obstacles.

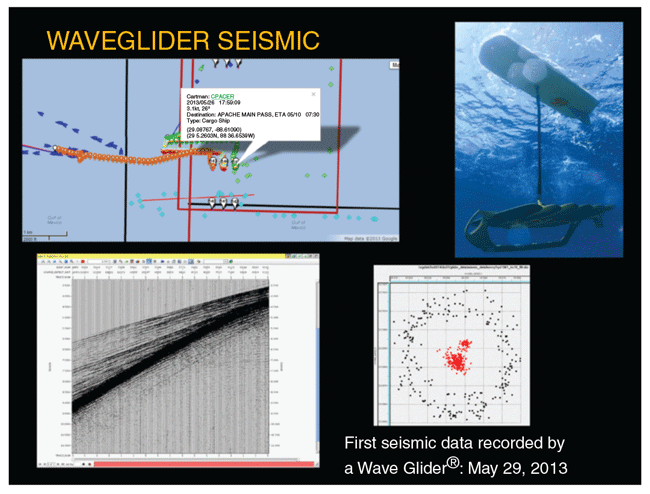

The next generation of autonomous marine nodes is likely to be independently powered and able to be positioned remotely. Development of powered underwater nodes is being undertaken, and tests have been conducted with surface Wave Gliders®. A Wave Glider is a surfboard-like unmanned device with a hybrid power system that generates propulsion mechanically from ocean wave motion and uses onboard solar panels to power recording and navigation systems.

A recent project in the Gulf of Mexico marked the first seismic data to be recorded using the technology. The completely self-sufficient ocean robots were commanded remotely to position and then acquire seismic data (Figure 5).

The possibilities with this kind of technology are intriguing as a new platform for recording seismic, providing the ability not only for extended autonomous data recording, but also for self-propulsion to navigate distant survey areas. In addition to seismic surveying, the technology also is being used for many other activities, including monitoring ocean currents and marine mammals, as well as monitoring the chemical composition of seawater.

Onshore, we have yet to see the use of fully automated, remotely controlled nodes. Conceptually, such devices simply could be scattered throughout the survey area where they would use global positioning to orient themselves and form a network. When the job was done and a command was issued, they could “walk” back to a common location to be picked up. While this may sound like science fiction, the military already uses similar devices as seismic sensors, scattering them from a helicopter to look acoustically for caves and tunnels.

Nodal technology onshore presents a new way of acquiring seismic data that has seen rapid adoption over the past five years. Nodes will not necessarily have a dramatic impact on an operator’s ability to drill better wells everywhere, but there are going to be some parts of the world where data can be acquired that previously could not be.

Nodes also permit irregular sampling, and remove the requirement for regular spacing that constrains receivers connected by cable, thereby allowing surveys to go around man-made and natural barriers or obstructions both on land and in water.

Mathematical developments in the area of “compressive sensing” suggest that random sampling may allow us to overcome some of the restrictions we have become accustomed to in processing regularly sampled data. If we can, indeed, overcome the limits of aliasing imposed by regular samples, then compressive sensing has a bright future in seismic processing.
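
The aliasing limit itself is easy to demonstrate. In the sketch below, two cosines at 3 and 7 cycles per unit distance are sampled at 10 regular samples per unit, which makes them indistinguishable. With randomly jittered sample positions, the two sets of samples differ. This illustrates only the sampling side of the argument, not the sparse reconstruction that compressive sensing also requires, and all values are illustrative.

```python
import numpy as np

def sample_cosine(freq, positions):
    """Sample a unit-amplitude cosine of the given frequency at the positions."""
    return np.cos(2.0 * np.pi * freq * np.asarray(positions))

regular = np.arange(20) / 10.0                     # 10 samples per unit
rng = np.random.default_rng(1)
jittered = regular + rng.uniform(0.0, 0.1, size=regular.size)

# Largest difference between the 3- and 7-cycle signals on each grid.
regular_gap = np.max(np.abs(sample_cosine(3.0, regular) - sample_cosine(7.0, regular)))
jittered_gap = np.max(np.abs(sample_cosine(3.0, jittered) - sample_cosine(7.0, jittered)))

print("regular grid difference:", regular_gap)
print("jittered grid difference:", jittered_gap)
```

On the regular grid the difference is zero to within rounding error, so no processing can tell the two signals apart. On the jittered grid the samples carry enough information to distinguish them.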

One of the challenges that comes with using massive numbers of autonomous nodes is power management. Think of the coordination required to manage 200,000 autonomous nodes, each with its own battery that needs recharging every 30-60 days. It is likely that innovation in power management will come from outside the oil and gas industry, perhaps from the cellphone or electric car industries.
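
A back-of-the-envelope calculation shows the scale of the task. The sketch assumes recharging is spread evenly over the battery life, which is a simplification, and the function name is hypothetical.

```python
def nodes_serviced_per_day(n_nodes, battery_life_days):
    """Average number of nodes that must be recharged or swapped each day."""
    return n_nodes / battery_life_days

for life_days in (30, 60, 365):
    print(life_days, round(nodes_serviced_per_day(200_000, life_days)))
```

With a 30-day battery, a 200,000-node spread needs several thousand recharges every day. A battery lasting a full year would cut that to a few hundred.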

Lithium ion batteries are likely to be replaced by something that is a lot more efficient or lasts longer, perhaps with a single charge lasting a year. Fuel cells may hold potential, but current technology is expensive because of platinum content.

Fiber Optics

Fiber optics present an attractive option for use as seismic receivers because measurements potentially can be made at any point along the fiber. Theoretically, many measurements could be made in a small length of fiber optic cable. Fiber optic detectors are already in use down hole for vertical seismic profiling work and in permanently embedded arrays, and testing of continuous fiber detectors on the surface has started to be reported.

Downhole fiber systems are often in place for another purpose, making interrogation for seismic data a secondary function. These are early days for fiber optics, so it may be a decade before it is in common use, but optical seismic technology has proven very effective in permanently installed 4-D reservoir monitoring tests.

Processing Trends

Doubling the number of active receivers at the same time the number of sources is doubled means the number of seismic traces in each square of 3-D seismic is increasing at an even faster rate. The “richness” of the acquired data, the sheer volumes of raw data, and the demand for higher-order processing algorithms such as full waveform inversion present new challenges to seismic processing. Fortunately, increased computing capabilities continue to negate the impact of increasing volumes of data, since Moore’s Law applies in this area as well, and continue to make data processing more time and cost efficient.
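
The compounding is simple to quantify if the trace count over a fixed area is taken as the number of shots multiplied by the number of live receivers per shot (an assumption for this sketch). The source doubling time used below is hypothetical, since the article quotes doubling times only for channel counts.

```python
def trace_count_growth(years, source_doubling_years, receiver_doubling_years):
    """Growth factor in traces when trace count = shots x live receivers."""
    source_factor = 2 ** (years / source_doubling_years)
    receiver_factor = 2 ** (years / receiver_doubling_years)
    return source_factor * receiver_factor

# If both sources and receivers double every 3.5 years, traces quadruple.
print(trace_count_growth(3.5, 3.5, 3.5))
print(trace_count_growth(7.0, 3.5, 3.5))
```

Two quantities that each double over a period produce a product that doubles in half that period, which is why trace counts outpace either trend alone.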

Two clear trends appear to be emerging in the seismic processing sector. First, well-understood processes such as time and depth migrations will become more accurate. The geophysicist has long known how to solve the imaging problem, but has not been able to afford it (computationally). As a result, past developments have been focused on optimizing for cost, sacrificing precision.

The computational requirements often make it necessary still to take shortcuts when running migration processing steps. It is unusual to run a full range of frequencies with reverse time migration because of the associated cost. However, as computer power increases, processes will get more efficient, fewer shortcuts will be needed, and the output will get closer to the truth.
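
A common rule of thumb explains why frequency is the first thing to be cut. In a 3-D time-domain finite-difference scheme, the grid spacing in each of the three spatial directions and the time step all shrink in proportion to the shortest wavelength, so cost grows roughly as the fourth power of the maximum frequency. The sketch assumes a fixed model size and a fixed number of grid points per wavelength, and it ignores memory and input/output costs.

```python
def relative_fd_cost(max_freq_hz, reference_freq_hz):
    """Approximate cost ratio for 3-D time-domain finite differences."""
    return (max_freq_hz / reference_freq_hz) ** 4

# Hypothetical frequency limits relative to a 20-hertz baseline.
for f in (20.0, 40.0, 80.0):
    print(f, relative_fd_cost(f, 20.0))
```

Doubling the maximum frequency therefore costs about 16 times as much computation under these assumptions.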

Second, there is a desire to extract more and different information from a larger set of data. Interpretation has become more than just about structure, because the vast quantity of acquired data can be studied in new and different ways. It has complicated the job of processing and interpretation, but ultimately leads to better performing wells and lower risk. Structure, rock properties and fracture details are all targets for interpreting the processed data.

When a structural interpretation of the subsurface was all that was available, operators drilled the “bumps” and hoped they were full of hydrocarbons. Today, seismic data can reveal crucial rock properties. Is the rock hard? Is it soft? Does it contain fluids? Is that fluid hydrocarbon, and what type and in what quantities? Operators can start to understand a lot of basic information on sand quality, rock properties, and the presence or absence of fluids before they spud a well.

In some cases, new processing methodologies that are likely to give significant benefits drive changes in how data are acquired. Conversely, new acquisition techniques can drive changes in processing. In some areas, it is not uncommon to have been acquiring multicomponent, shear-wave data for several years, but processing techniques are still evolving and often are not yet in place to extract all the information from those data. The data are waiting for processing algorithms to catch up.

Data Life Cycle

The life cycle for extracting meaningful information from a seismic dataset is typically only four to five years. By then, processing techniques will have improved to the point where it may be appropriate to reprocess the data for re-examination. After a decade has passed, acquisition technology has moved on so much that it often is better to acquire new data that are significantly better.

New data may contain 10-fold the information, or they may have better bandwidth. It does not matter how much processing has improved, if the frequencies, offsets or azimuthal distribution are not present in the raw data.

Sometimes a large dataset or a multiclient speculative survey covering a vast area may be worth reprocessing to identify targets for new acquisition programs. It can be difficult to process a very large area in a way that highlights detail in a small part of the survey. The best approach may be to use the large processed volume to get an initial structural interpretation, and then home in on the details of an area. Reprocessing the data can yield subtle features and more information about rock properties.

While seismic acquisition capabilities are advancing rapidly in many areas, the timeline for new seismic acquisition techniques and technologies from development to full adoption can be long. It’s not atypical for a new idea to take 25 years before it matures into a regularly used commercial approach to exploration. To be successful in finding discoveries and optimizing the value of existing assets, oil and gas companies need to track and utilize new technology early in its life. It is a long-term issue for a long-term business need.

The challenge for operators is to stay informed about new technologies and constantly test the potential value in their operations. Usually, it is a question of “when” and not “if” technologies will be adopted. At the end of the day, the goal is to have more successful wells. By monitoring developments in seismic acquisition and other areas, operators have the best chance of picking the right technology at the right time to achieve that goal.

DAVID MONK is worldwide director of geophysics and distinguished adviser at Apache Corp. in Houston, responsible for seismic activity, acquisition and processing in areas such as the United States, Australia, Canada, Egypt and the North Sea. He is the immediate past president of the Society of Exploration Geophysicists. Monk has spent his 35-year professional career working in various aspects of seismic acquisition and processing in most parts of the world, including serving at Apache for the past 15 years. Monk has received “best paper” awards from the SEG and the Canadian SEG, and is a recipient of the Hagedoorn Award from the European Association of Exploration Geophysics. He is a life member of SEG and an honorary member of the Geophysical Society of Houston. Monk holds a B.S. and a Ph.D. in physics from the University of Nottingham.

For other great articles about exploration, drilling, completions and production, subscribe to The American Oil & Gas Reporter and bookmark www.aogr.com.