Modular, Scalable AI Transforms Operations From Reactive To Proactive

By Vikram Jayaram, Annorah Lewis, Ryan Mercer and Abhishek Kothari

In the world of medicine, mRNA has revolutionized treatment. No longer one-size-fits-all, therapies can be tailored to a patient’s unique genetics, physiology, and disease profile. A diabetic athlete and a sedentary senior with the same condition no longer receive identical care; their treatments are now precise, dynamic, and deeply personal.

Oil field assets, too, are like patients—each compressor, pump, or pipeline carries its own DNA: the vintage of installation, history of failures, the environment it operates in, and the abuse it has taken. Yet, today, we treat them with broad strokes, relying on generic maintenance schedules and cookie-cutter analytics.

In the past, that made sense, because it would be impossible for even the most efficient maintenance teams to develop individualized plans for all but a tiny fraction of their assets. But just as mRNA has unlocked personalized healing in medicine, AI can rewrite the standard of care for machines. Instead of generalizing, AI can listen to, learn from, and adapt to each asset’s history and make recommendations based on actual conditions rather than rules of thumb.

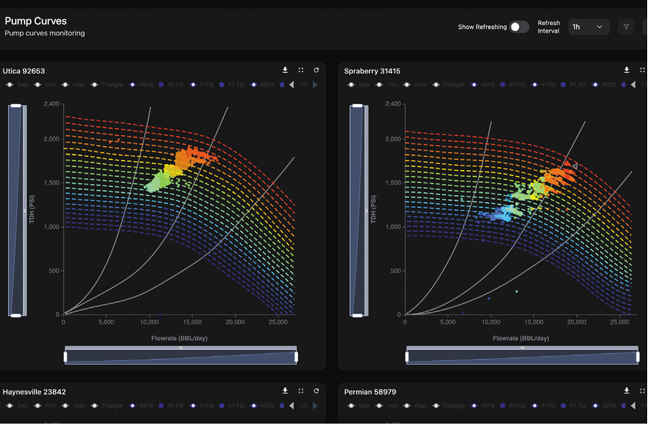

Imagine a control room where AI learns from every pump cycle, sees degradation before it hits, and adapts pumping policy dynamically, without requiring hardware swaps or guesswork. For operators facing volatile energy prices, field complexity, sustainability expectations, and workforce constraints, this intelligent control room would have a huge impact on operating costs, uptime and production.

Indeed, predictive AI that is built on engineering first principles has cut energy costs by as much as 40%. At the same time, it is reshaping how smart operators manage artificial lift equipment, compressors and saltwater disposal sites.

By pairing modular digital twins with transparent, KPI-based intelligence, new AI tools enable real-time optimization without opaque algorithms. For executives seeking faster returns on investment, improved uptime, and scalable automation, these tools offer a practical, proven framework that is ready to deploy.

Grounding AI With Physics

The core tenet of that framework is “first principles AI,” an approach that combines the fundamentals of engineering physics with advanced machine learning techniques. Instead of relying solely on pattern recognition or black-box models, AI systems that follow first principles deconstruct physical processes into their core drivers, such as mass balance, thermodynamic constraints, and mechanical relationships, then use this understanding to guide intelligent optimization.

Physics-based AI models have several advantages. Because the models can explain how they reach their conclusions by referencing first principles, they are easier for users to trust than systems that depend primarily on statistics. The models also generalize more easily across asset types, a valuable trait for users who need actionable results across a diverse portfolio.

AI algorithms for monitoring and optimizing downhole or surface pumps can deliver significant savings by reducing energy consumption and spotting early signs of issues. For one saltwater disposal well operator, these benefits helped shrink the cost per barrel by more than 10%.

Such models can support a far more efficient control paradigm. Today, most SCADA systems offer fragmented, reactive insights. Engineers must manually interpret patterns, often only after inefficiencies or failures have already occurred. But with AI, machines can process multivariate data in real time, identify anomalies, and adapt operations based on economic and physical constraints. The result is a supervisory control layer that supports better, faster, and more consistent decisions.

As a case in point, consider equipment efficiency. In many areas, including parts of Texas and Oklahoma, pumps’ energy consumption is not only a cost issue, but also an operational constraint and a lever for sustainability. Recognizing that reality, a major midstream operator responsible for dozens of produced water injection sites began looking for ways to reduce electricity consumption without compromising throughput or volume commitments.

The midstream company deployed a first-principles-based AI system focused on reducing kWh per barrel and cost per barrel while delivering clear recommendations to field operations teams. The system uncovered high-impact opportunities by:

- Breaking down pump inefficiencies into fundamental energy drivers;

- Visualizing site-level key performance indicators with real-time trend comparisons; and

- Recommending frequency adjustments based on daily flow variability.

Initial results included:

- 10-14% reductions in the cost per barrel;

- Energy savings as high as 40% at select sites; and

- Rapid margin improvements and measurable cost savings across the network.

The deployment also demonstrated that with the right approach, AI can be deployed at scale.
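To make the two headline KPIs concrete, here is a minimal Python sketch of how kWh per barrel and cost per barrel might be computed from daily site data. The `SiteDay` schema, the function names, and every number in the example are illustrative assumptions, not the operator's actual system or results.

```python
from dataclasses import dataclass


@dataclass
class SiteDay:
    """One day of readings for one injection site (hypothetical schema)."""
    energy_kwh: float        # electricity consumed by the pumps
    barrels_injected: float  # produced water volume injected
    price_per_kwh: float     # electricity price in dollars


def kwh_per_barrel(day: SiteDay) -> float:
    """Energy intensity: kilowatt-hours used per barrel injected."""
    if day.barrels_injected <= 0:
        raise ValueError("barrels_injected must be positive")
    return day.energy_kwh / day.barrels_injected


def cost_per_barrel(day: SiteDay) -> float:
    """Electricity cost per barrel, in dollars."""
    return kwh_per_barrel(day) * day.price_per_kwh


def percent_reduction(before: float, after: float) -> float:
    """Relative improvement of a KPI, expressed as a percentage."""
    return 100.0 * (before - after) / before


# Illustrative numbers only (assumed, not taken from the deployment).
baseline = SiteDay(energy_kwh=12_000, barrels_injected=20_000, price_per_kwh=0.08)
tuned = SiteDay(energy_kwh=10_560, barrels_injected=20_000, price_per_kwh=0.08)

saving = percent_reduction(cost_per_barrel(baseline), cost_per_barrel(tuned))
print(f"Cost per barrel reduced by {saving:.1f}%")
```

Normalizing by barrels rather than tracking raw energy use lets sites with very different volumes be compared on the same trend chart.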

Achieving Scale

For AI to offer practical solutions to the diverse and ever-changing challenges in the upstream and midstream space, it needs to be customizable at a reasonable cost. Only the most significant projects could justify AI if deploying it required developing tools for aggregating data from sensors, machines and enterprise systems, training and validating custom AI models, and writing bespoke software to present those models’ insights in a useful way.

Fortunately, the best AI architectures support modularization. Instead of starting from scratch each time companies want to solve a new problem, AI experts can reuse templates created for previous tasks. These templates are flexible enough that they act like Legos, where the same building blocks can construct everything from a house to a skyscraper.

Knowing which modules to use and linking them together in the right way takes some skill, but it is easy enough that projects can be piloted with relative speed. It’s also remarkably flexible. The same architecture that helps midstream companies optimize pumps’ energy consumption also underlies programs that boost artificial lift systems’ effectiveness or keep deepwater compressors running as long as possible.

One advantage of that architecture is its ability to create digital twins of equipment. Unlike the digital twins usually seen in the oil field, which are often limited to static visualizations or post-hoc simulations, these AI-guided twins offer real-time information and predictions that are continuously informed by SCADA and historian data, grounded in physics, and calibrated to site-specific behaviors. Because they exist to help users make the right calls, the predictions consider economic factors.

Each digital twin is created from a template that captures the DNA of a field asset, including its flow behavior, motor response, historic maintenance records, environmental context, and load conditions. These templates allow for rapid deployment across similar asset types while adapting to local variations, enabling a modular and scalable twin architecture.

Once instantiated, each twin does more than reflect physical operations. It becomes a live simulation and decision-support engine, capable of answering operational questions in real time, such as:

- How should pump frequency adjust given the latest flow volumes, reservoir drawdown, and power pricing?

- Where is performance likely to degrade based on cumulative stress or wear indicators?

- Which frequency or runtime policies will deliver optimal per-barrel margin, given throughput constraints?

Operators can test thousands of control scenarios virtually before making field-level changes, reducing risk, improving uptime, and accelerating time to insight.
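The kind of virtual scenario testing described above can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the digital twin itself: it assumes a centrifugal pump that follows the classic affinity laws (flow proportional to speed, power proportional to speed cubed), which is only a reasonable approximation when static head is negligible. The rated values, electricity price, disposal fee, and the `simulate` and `best_policy` names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Scenario:
    freq_hz: float
    barrels_per_day: float
    kwh_per_bbl: float
    margin_per_bbl: float


def simulate(freq_hz: float,
             rated_hz: float = 60.0,        # assumed rated drive frequency
             rated_bpd: float = 20_000.0,   # assumed flow at rated frequency
             rated_kw: float = 300.0,       # assumed power at rated frequency
             price_per_kwh: float = 0.08,   # assumed electricity price ($)
             fee_per_bbl: float = 0.50) -> Scenario:  # assumed revenue ($/bbl)
    """Predict one day of operation at a fixed frequency via affinity laws."""
    if freq_hz <= 0:
        raise ValueError("freq_hz must be positive")
    ratio = freq_hz / rated_hz
    barrels = rated_bpd * ratio              # flow scales with speed
    kwh = rated_kw * ratio ** 3 * 24.0       # power scales with speed cubed
    kwh_per_bbl = kwh / barrels
    margin = fee_per_bbl - kwh_per_bbl * price_per_kwh
    return Scenario(freq_hz, barrels, kwh_per_bbl, margin)


def best_policy(freqs: Iterable[float], min_bpd: float) -> Optional[Scenario]:
    """Return the feasible scenario with the highest per-barrel margin."""
    feasible = [s for s in map(simulate, freqs) if s.barrels_per_day >= min_bpd]
    return max(feasible, key=lambda s: s.margin_per_bbl, default=None)


# Sweep 40-60 Hz in 1 Hz steps against a 15,000 bbl/d volume commitment.
best = best_policy((float(f) for f in range(40, 61)), min_bpd=15_000.0)
print(best)
```

Under these assumptions, energy per barrel rises with the square of speed, so the sweep settles on the slowest frequency that still meets the volume commitment. A production twin would replace the affinity-law shortcut with a model calibrated to site data and would add the other constraints the article mentions, such as reservoir drawdown and time-varying power pricing.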

Expanding Use Cases

The same template-driven architecture is already applied to:

- ESP systems, where it spots early warning signs by looking at silt patterns;

- Compressor units, where it identifies skid-level inefficiencies by comparing current conditions to dynamic KPI baselines; and

- Pipelines, where it detects leaks by mapping deviations in flow pressures.

Given the costs associated with offshore work, some of the most compelling applications for modular, first principles AI involve deepwater wells. To maximize these wells’ economics, operators must manage extreme pressures, unpredictable conditions, varied geologic responses, and equipment failure risks.

Of these factors, equipment failure is the one people have the most control over. Unfortunately, conventional approaches to preventing failure rely on post-hoc manual analysis after periodic data collection. The gaps between analyses, as well as limited integration between the surface and subsurface, mean that maintenance and operation decisions often end up being reactive.

This situation is exacerbated by the complexity of the data that is available. For example, acoustic sand detectors may be deployed on high-pressure, high-temperature wells to monitor sand buildup. These devices are plagued by noise and sensitivity issues. Even with filtering, they may not distinguish between sand and ambient interference, especially near chokes.

AI excels at dealing with imperfect data. By using it to mine acoustic sand detection recordings, companies can detect early signs of sand production leading to equipment wear and production loss. These insights enable predictive maintenance and automated control, reducing costly interventions and manual oversight.

Careful Deployment

Such subsea automation does not happen overnight. While AI that follows first principles and displays current digital twins is easier for engineers to trust than blind models that depend entirely on statistical inferences, engineers still want to validate the results. With that in mind, we generally recommend adopting AI in three stages.

Modular, physics-based AI systems can be configured to tackle a variety of applications. Because they follow the scientific first principles that govern how the world works, these models adapt to changing conditions or new environments with relative ease. By pairing that foundation with digital twins representing equipment’s current state, they produce recommendations that engineers comprehend and trust.

During the first stage, the system focuses on detecting anomalies, diagnosing the cause, and issuing alerts. In the second stage, it will augment those alerts with recommendations that identify optimal control adjustments, such as changes to set points, as well as predictions about those changes’ effects.

Once the system has demonstrated that it can accurately diagnose problems and evaluate solutions, companies usually feel comfortable moving to the third stage, partial autonomy. With supervisory approval, the platform executes real-time, closed-loop adjustments, turning insight into action while preserving human oversight.

This progression ensures that human expertise remains central, while allowing the AI system to accelerate decision-making and continuously improve with each operational cycle.

Significant Impact

Whether it is in the first stage of the adoption curve or the last, physics-based AI can transform maintenance from a cost center into a revenue lever. Instead of waiting for equipment to degrade and reacting, its users can predict when degradation will occur and weigh the cost of maintenance against the impact on revenue.

By analyzing pressure, flow, and runtime dynamics before and after service events, AI can model how each intervention affects asset lifespan and daily throughput. These insights are embedded into the digital twin, forecasting the most cost-effective intervention window.

In early deployments, such precisely timed maintenance, scheduled not by calendar but by data-driven thresholds, has lifted daily revenue as much as 5%. At scale, this yields substantial improvements in field-wide financial performance.

Saltwater disposal wells illustrate how much even small improvements in performance matter when they can be realized across a company’s portfolio. For a single SWD site, a 1% increase in revenue can contribute $40,000 to the bottom line. Replicate that increase across 250 sites, and it adds up to $10 million.
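The portfolio arithmetic is simple enough to check directly. The short Python sketch below uses only the two figures quoted above; the function name is an illustrative assumption.

```python
def portfolio_gain(gain_per_site: int, site_count: int) -> int:
    """Total gain, in dollars, if every site realizes the same improvement."""
    return gain_per_site * site_count


# Figures quoted in the article: $40,000 per site across 250 sites.
total = portfolio_gain(40_000, 250)
print(f"${total:,}")
```

The same function makes it easy to see how the total shrinks if only some sites capture the full improvement.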

AI’s combination of speed, precision and scalability is why the future of oil field operations will no longer be driven by reactive decisions or fragmented tools. It will be led by AI-powered control rooms that think ahead, continuously learn, and push every asset to perform at its best.

VIKRAM JAYARAM is the chief technology officer and founder of Neuralix Inc., which uses physics-based AI to solve problems for the energy and manufacturing sectors. He has more than 20 years of experience applying machine learning in industry and academia. As head of the data science and advanced analytics group at Pioneer Natural Resources, Jayaram led a multidisciplinary team that developed solutions for real-time command centers. Earlier in his career, he worked as a senior research scientist at Global Geophysical Services, where he improved seismic processing algorithms. Jayaram holds an M.S. and a Ph.D. in electrical engineering from the University of Texas at El Paso, and a post-doctoral fellowship from M.D. Anderson Cancer Center.

ANNORAH LEWIS is a business operations and product leader at Neuralix Inc., where she oversees the company’s go-to-market strategies and helps apply modern technology to industry challenges. Lewis has supported efforts related to predictive maintenance, emissions mitigation and asset optimization. She earned a B.S. in computer science from Virginia Tech and studied business operations, economics and accounting while completing Harvard Business School’s online program.

RYAN MERCER is a staff data scientist at Neuralix, where he helps energy and manufacturing companies improve their operations using AI. His past projects include predicting failures in saltwater disposal well systems, optimizing horizontal pumps’ energy consumption and pinpointing methane leaks. Before joining Neuralix, Mercer did AI-focused research internships at Form Energy, Toyota North America and Visa. He holds a B.S. in electrical engineering from the University of California, Los Angeles, and a Ph.D. in computer science from the University of California, Riverside.

ABHISHEK KOTHARI is a senior software engineer at Neuralix, where he excels in industrial data and applies a deep understanding of processes to solution engineering and product development for upstream and midstream operators. Having pursued an M.S. in computer science from the University of Florida, he has prior experience as an engineer at Oracle and as a consultant at Deloitte.

For other great articles about exploration, drilling, completions and production, subscribe to The American Oil & Gas Reporter and bookmark www.aogr.com.